How to Remove Background in ComfyUI?

Removing the background from images has a wide array of applications that can significantly enhance efficiency and creativity across various scenarios.

Here are some common use cases:

- Product Photography: Create a clean, uniform look for your products to make them more appealing in online stores or catalogs.

- Personal Photo Editing: Improve the quality of photos for social media or personal projects by removing cluttered backgrounds to highlight the subject.

- Design and Creative Projects: Whether it's designing posters, invitations, or website backgrounds, removing unwanted backgrounds can make your designs stand out and look more professional.

- Advertising and Marketing Materials: Create eye-catching marketing visuals, whether for online ads, flyers, or brochures, where a clear visual focus is key.

- Game and App Development: Create precise assets for characters and objects in games or apps without worrying about background interference, making development more efficient.

- Educational and Training Materials: When creating educational videos or online courses, clearing irrelevant backgrounds can help students focus more on the instructional content.

Many online apps let you remove the background of an image for free. Here, we introduce three ways to remove backgrounds in ComfyUI: Rembg, RMBG, and Segment Anything.

Rembg

Rembg is an open-source tool for removing image backgrounds.

Models

The available models are:

- u2net: A pre-trained model for general use cases.

- u2netp: A lightweight version of the u2net model.

- u2net_human_seg: A pre-trained model for human segmentation.

- u2net_cloth_seg: A pre-trained model for clothes parsing from human portraits. Clothes are parsed into three categories: upper body, lower body, and full body.

- silueta: Same as u2net, but the size is reduced to 43 MB.

- isnet-general-use: A new pre-trained model for general use cases.

- isnet-anime: A high-accuracy segmentation model for anime characters.

- sam: A pre-trained model for any use case (distributed as separate encoder and decoder files).

The models can be downloaded from the rembg project page.

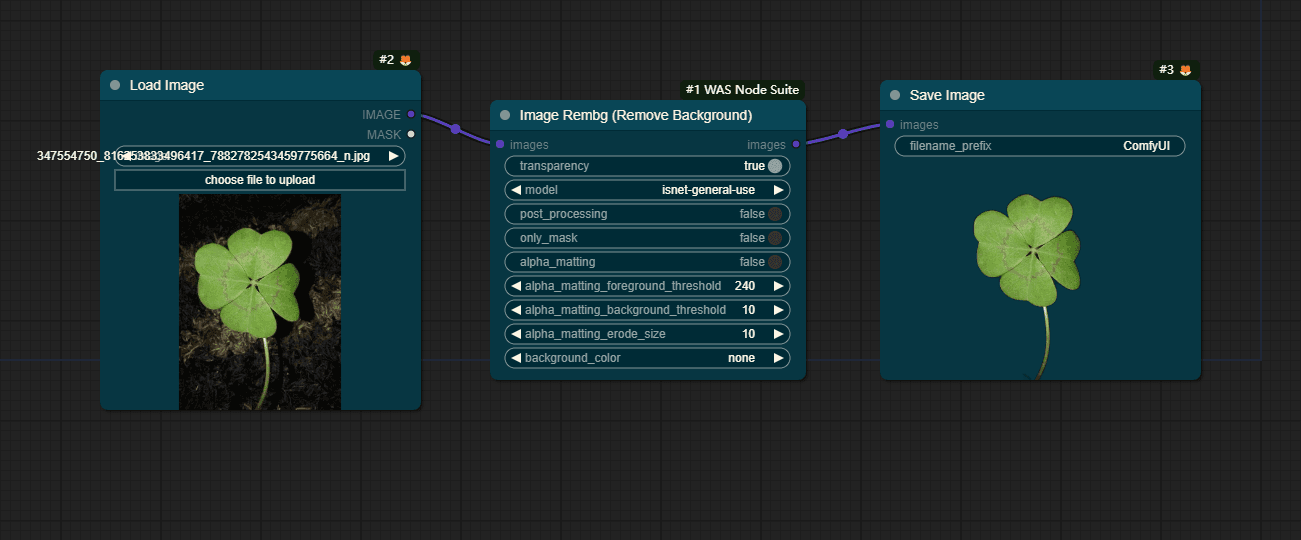

Use rembg with WAS Node Suite

WAS Node Suite is a node suite for ComfyUI that adds many new nodes for image processing, text processing, and more. It is available on GitHub as WAS Node Suite.

The Image Rembg (Remove Background) node wraps the rembg models, so you can use it to remove backgrounds inside a ComfyUI workflow. Its parameters are listed below, followed by a simple workflow.

Input Parameters

Required

- images (IMAGE): The input images from which the background will be removed.

- transparency (BOOLEAN, default: true): Specifies whether the output image keeps transparency (an alpha channel) where the background was removed.

- model (ENUM, options: u2net, u2netp, u2net_human_seg, silueta, isnet-general-use, isnet-anime): Selects the model used for background removal. Each model has its own strengths and is tailored to specific types of images or requirements.

- post_processing (BOOLEAN, default: false): Enables additional processing after the background has been removed, to refine the output image.

- only_mask (BOOLEAN, default: false): If enabled, the node outputs only the mask used for background removal instead of the cut-out image.

- alpha_matting (BOOLEAN, default: false): Applies alpha matting to improve edge detail and transparency handling in the result.

- alpha_matting_foreground_threshold (INT, default: 240, range: 0-255): Sets the foreground threshold for alpha matting: mask values above it are treated as definite foreground.

- alpha_matting_background_threshold (INT, default: 10, range: 0-255): Sets the background threshold for alpha matting: mask values below it are treated as definite background.

- alpha_matting_erode_size (INT, default: 10, range: 0-255): Sets the size of the erosion applied during alpha matting. Larger values shrink the definite foreground and background regions, widening the uncertain band along the edges.

- background_color (ENUM, options: none, black, white, magenta, chroma green, chroma blue): Fills the areas where the background was removed with a solid color. This is particularly useful when transparency is disabled.
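To make the three alpha_matting_* parameters more concrete, here is a minimal pure-Python sketch of how a coarse 0-255 mask can be split into a trimap before matting. It is modeled loosely on rembg's alpha matting step as we understand it (definite foreground above the foreground threshold, definite background below the background threshold, both regions eroded, everything else marked unknown). The names `erode` and `build_trimap` are hypothetical, the square-window erosion is a simplification, and the real code works on NumPy arrays and then hands the trimap to a matting solver, which this sketch omits.

```python
from typing import List

Mask = List[List[int]]      # coarse mask, values 0-255
Region = List[List[bool]]


def erode(region: Region, size: int, border: bool) -> Region:
    """Binary erosion with a square window 2 * (size // 2) + 1 pixels wide.

    A pixel survives only if every pixel in its window is True; pixels outside
    the image are treated as `border`. Sizes of 0 or 1 leave the region unchanged.
    """
    if size <= 1:
        return [row[:] for row in region]
    r = size // 2
    h, w = len(region), len(region[0])

    def value(y: int, x: int) -> bool:
        return region[y][x] if 0 <= y < h and 0 <= x < w else border

    return [
        [
            all(value(y + dy, x + dx) for dy in range(-r, r + 1) for dx in range(-r, r + 1))
            for x in range(w)
        ]
        for y in range(h)
    ]


def build_trimap(mask: Mask, foreground_threshold: int = 240,
                 background_threshold: int = 10, erode_size: int = 10) -> Mask:
    """Return a trimap: 255 = definite foreground, 0 = definite background, 128 = unknown."""
    foreground = [[v > foreground_threshold for v in row] for row in mask]
    background = [[v < background_threshold for v in row] for row in mask]
    # Shrink both confident regions so the band along the edges becomes "unknown".
    # Outside the frame counts as background but not as foreground, so only the
    # foreground shrinks away from the image border.
    foreground = erode(foreground, erode_size, border=False)
    background = erode(background, erode_size, border=True)
    return [
        [255 if fg else 0 if bg else 128 for fg, bg in zip(fg_row, bg_row)]
        for fg_row, bg_row in zip(foreground, background)
    ]
```

Raising erode_size widens the 128 band, which gives the matting step more room to recover soft edges such as hair, at the cost of more computation.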

Output

- images (IMAGE, not a list): The input image with the background removed, according to the specified parameters and selected model.

Category

- WAS Suite/Image/AI
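The transparency and background_color options come down to two ways of using the mask. The sketch below is a hypothetical illustration, not the WAS node's code: with no background color it keeps the mask as the alpha channel (RGBA output), and with a color it alpha-blends the cut-out over a solid fill (RGB output). The RGB values in `BACKGROUND_COLORS` are assumptions chosen for illustration.

```python
from typing import Dict, List, Optional, Tuple

RGB = Tuple[int, int, int]

# Illustrative RGB values; the exact colors WAS Node Suite uses are an assumption.
BACKGROUND_COLORS: Dict[str, RGB] = {
    "black": (0, 0, 0),
    "white": (255, 255, 255),
    "magenta": (255, 0, 255),
    "chroma green": (0, 255, 0),
    "chroma blue": (0, 0, 255),
}


def composite(image: List[List[RGB]], mask: List[List[int]],
              background: Optional[str] = None) -> List[List[Tuple[int, ...]]]:
    """Cut out `image` using `mask` (0 = background, 255 = foreground).

    With background=None the mask becomes the alpha channel (RGBA pixels);
    otherwise each pixel is alpha-blended over the named solid color (RGB pixels).
    """
    result = []
    for img_row, mask_row in zip(image, mask):
        out_row = []
        for (r, g, b), a in zip(img_row, mask_row):
            if background is None:
                out_row.append((r, g, b, a))
            else:
                t = a / 255  # opacity of the foreground pixel
                out_row.append(tuple(
                    round(c * t + bg * (1 - t))
                    for c, bg in zip((r, g, b), BACKGROUND_COLORS[background])
                ))
        result.append(out_row)
    return result
```

The blend is the standard "over" formula, out = α·F + (1 − α)·B with α = mask / 255, so soft mask edges fade into the fill color instead of leaving a hard outline.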

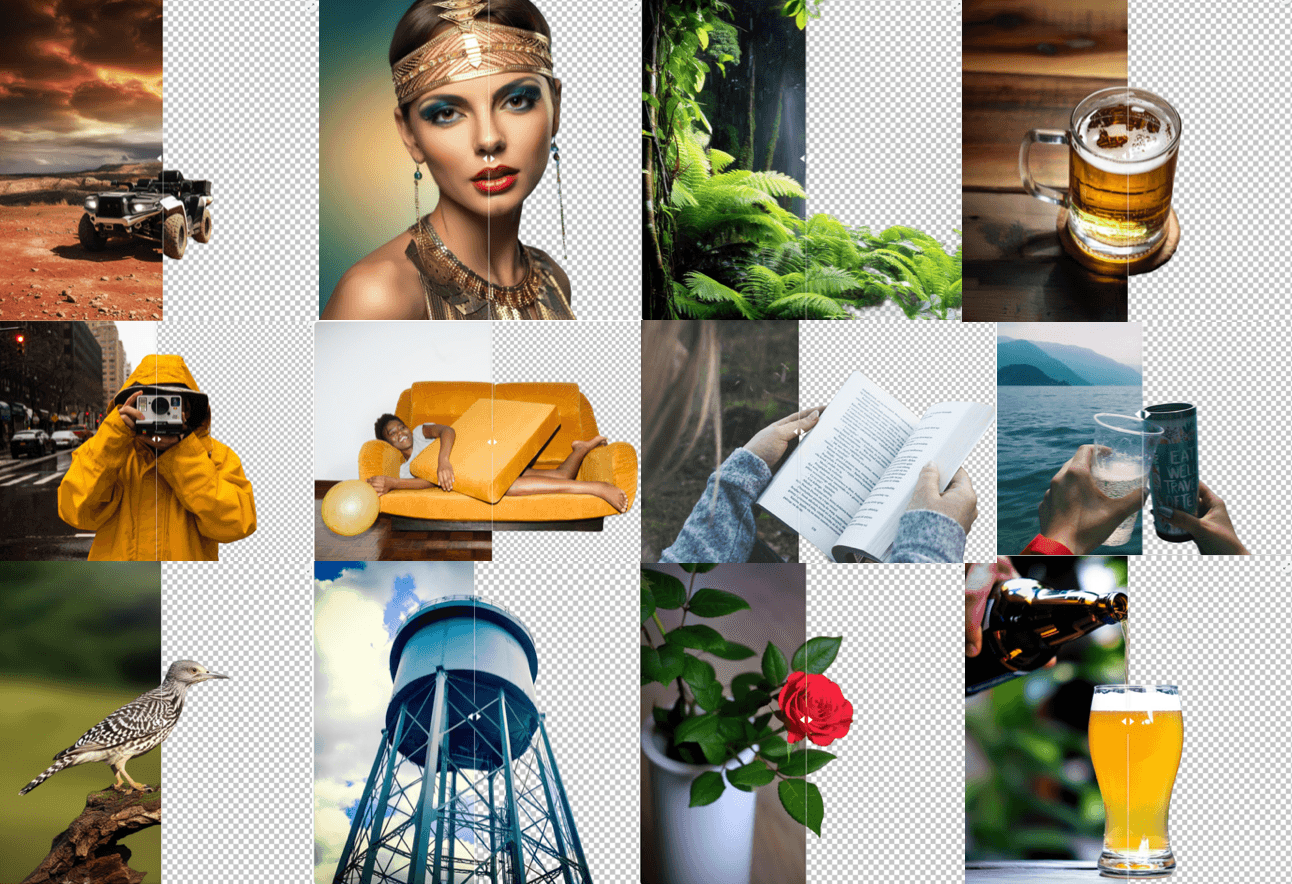

RMBG

RMBG v1.4 is BRIA AI's state-of-the-art background removal model, designed to effectively separate foreground from background across a range of categories and image types. It was trained on a carefully selected dataset that includes general stock images, e-commerce, gaming, and advertising content, making it suitable for commercial use cases that power enterprise content creation at scale. According to BRIA AI, its accuracy, efficiency, and versatility currently rival leading source-available models, and it is ideal where content safety, legally licensed datasets, and bias mitigation are paramount.

Developed by BRIA AI, RMBG v1.4 is available as a source-available model for non-commercial use.

Models

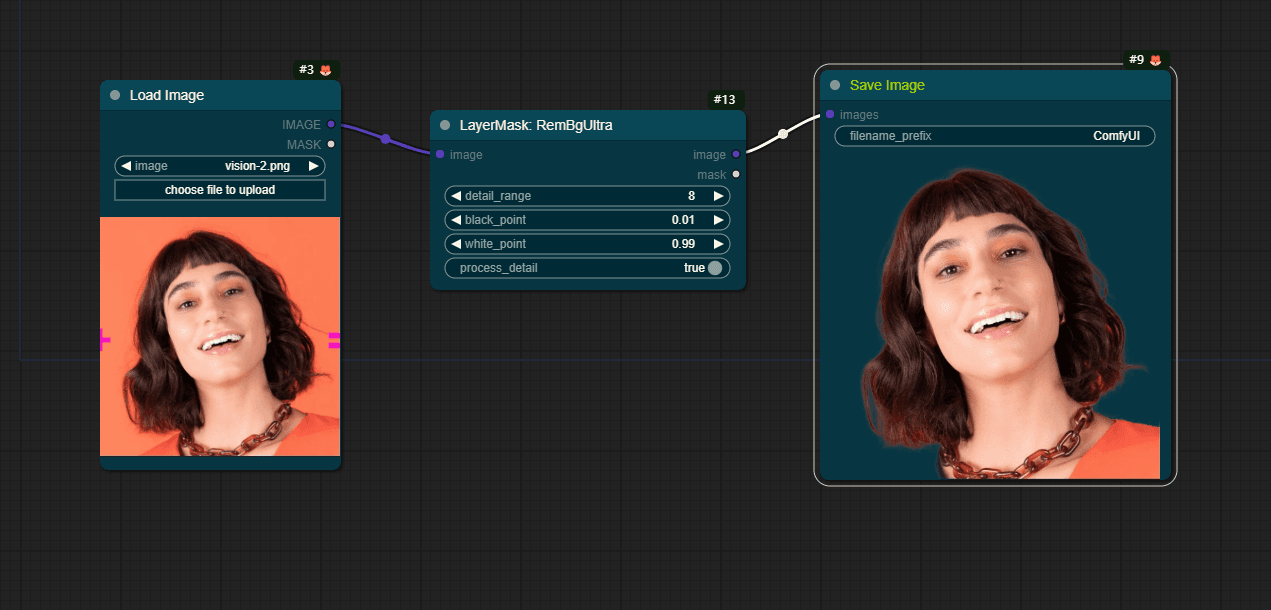

Use RMBG with LayerMask

The RemBgUltra node in LayerMask combines the Alpha Matte node from spacepxl's ComfyUI-Image-Filters with the functionality of ZHO-ZHO-ZHO's ComfyUI-BRIA_AI-RMBG.

Input Parameters

Required

- image (IMAGE): The input image from which the background will be removed.

- detail_range (INT, default: 8, range: 0-256, step: 1): Adjusts the range of detail preserved or removed during background removal. A higher value retains more fine detail.

- black_point (FLOAT, default: 0.01, range: 0.01-0.98, step: 0.01): Sets the black point for the dynamic range adjustment, influencing the darkest parts of the image.

- white_point (FLOAT, default: 0.99, range: 0.02-0.99, step: 0.01): Sets the white point for the dynamic range adjustment, affecting the brightest parts of the image.

- process_detail (BOOLEAN, default: true): Determines whether the node processes and retains fine details in the image during background removal.
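The detail options can be pictured as turning a coarse mask into a trimap for alpha matting. Assuming RemBgUltra follows the approach of the Alpha Matte node it borrows from (blur the coarse mask, then threshold it), black_point and white_point decide which blurred values count as definite background and definite foreground, and everything in between is left for the matting solver. This is a hedged pure-Python sketch: `box_blur`, `to_trimap`, and `edge_trimap` are hypothetical names, the box blur stands in for whatever filter the node actually uses, and using detail_range directly as the blur radius is an assumption.

```python
from typing import List

# A mask as rows of floats in 0.0-1.0 (0 = background, 1 = foreground).
Mask = List[List[float]]


def box_blur(mask: Mask, radius: int) -> Mask:
    """Mean filter over a (2*radius+1)-wide square window, clipped at the image edges."""
    if radius <= 0:
        return [row[:] for row in mask]
    h, w = len(mask), len(mask[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = [
                mask[yy][xx]
                for yy in range(max(0, y - radius), min(h, y + radius + 1))
                for xx in range(max(0, x - radius), min(w, x + radius + 1))
            ]
            row.append(sum(window) / len(window))
        out.append(row)
    return out


def to_trimap(mask: Mask, black_point: float, white_point: float) -> Mask:
    """0.0 = definite background, 1.0 = definite foreground, 0.5 = unknown."""
    if black_point >= white_point:
        raise ValueError("black_point must be lower than white_point")
    return [
        [1.0 if v > white_point else 0.0 if v < black_point else 0.5 for v in row]
        for row in mask
    ]


def edge_trimap(mask: Mask, detail_range: int = 8,
                black_point: float = 0.01, white_point: float = 0.99) -> Mask:
    # Hypothetical mapping: detail_range is the blur radius, so a larger value
    # widens the band of unknown pixels around the mask edges.
    return to_trimap(box_blur(mask, detail_range), black_point, white_point)
```

Running it on a hard 0/1 edge shows the effect: with detail_range=0 the trimap keeps the hard edge, while detail_range=1 turns the two pixels next to the edge into unknown (0.5) pixels that a matting step would then refine.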

Output Parameters

- image (IMAGE, not a list): The processed image with the background removed.

- mask (MASK, not a list): A mask indicating which areas of the image were kept as foreground by the removal process.

Category

- 🚚dzNodes/LayerMask

Segment Anything

Models

The Segment Anything Model (SAM) produces high-quality object masks from input prompts such as points or boxes, and it can be used to generate masks for all objects in an image. It has been trained on a dataset of 11 million images and 1.1 billion masks, and has strong zero-shot performance on a variety of segmentation tasks.

| Filename | Size |
|---|---|
| efficientsam_s.pth | 106 MB |
| efficientsam_ti.pth | 41 MB |
| mobile_sam.pth | 40.7 MB |
| sam_vit_b_01ec64.pth | 375 MB |
| sam_vit_h_4b8939.pth | 2.56 GB |
| sam_vit_l_0b3195.pth | 1.25 GB |

Use SAM with comfyui_segment_anything

comfyui_segment_anything is a ComfyUI version of https://github.com/continue-revolution/sd-webui-segment-anything. At present, only the core functionality has been implemented, and the project credits continue-revolution for the earlier work it is based on.

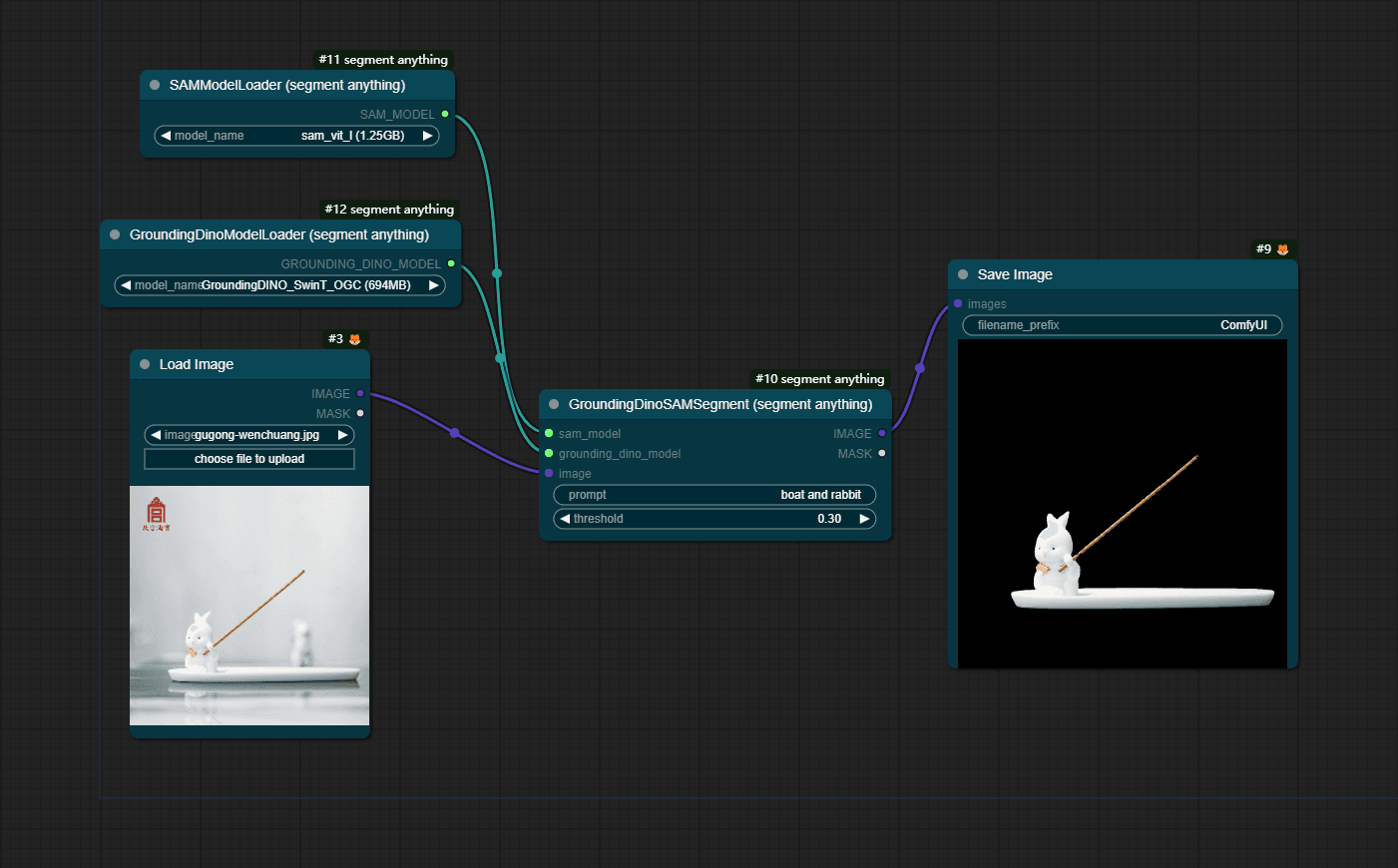

The workflow uses the SAMModelLoader, GroundingDinoModelLoader, and GroundingDinoSAMSegment nodes. Provide a text prompt describing the object to keep in the prompt parameter of GroundingDinoSAMSegment.

Input Parameters

Required

- sam_model (SAM_MODEL): The Segment Anything model, loaded with SAMModelLoader, that turns each detected object into a mask.

- grounding_dino_model (GROUNDING_DINO_MODEL): The Grounding DINO model, loaded with GroundingDinoModelLoader. It is an open-set object detector that locates the objects described by the text prompt and passes their bounding boxes to SAM.

- image (IMAGE): The input image to be segmented according to the prompt.

- prompt (STRING): A text prompt naming the object(s) to segment, for example "person" or "dog", which allows flexible segmentation based on natural language.

- threshold (FLOAT, default: 0.3, range: 0-1.0, step: 0.01): The confidence threshold for Grounding DINO's detections; only boxes scoring above it are segmented. Lowering it selects more (possibly unrelated) objects, while raising it keeps only confident matches.
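Conceptually, GroundingDinoSAMSegment runs in two stages: Grounding DINO returns boxes with confidence scores for the prompt, and SAM turns each box into a mask. The sketch below shows only the final step as we understand it: keep the detections whose score exceeds threshold and merge their masks with a pixel-wise OR. The `Detection` type and `combine_detections` function are hypothetical, and the node's exact behavior (for example, when nothing matches) may differ.

```python
from dataclasses import dataclass
from typing import List

Mask = List[List[bool]]


@dataclass
class Detection:
    score: float  # Grounding DINO confidence that this box matches the prompt
    mask: Mask    # SAM mask generated from the detected box


def combine_detections(detections: List[Detection], height: int, width: int,
                       threshold: float = 0.3) -> Mask:
    """Union of the masks of all detections scoring above `threshold`."""
    kept = [d for d in detections if d.score > threshold]
    # With no match this yields an all-False mask; the real node may behave differently.
    return [
        [any(d.mask[y][x] for d in kept) for x in range(width)]
        for y in range(height)
    ]
```

Because the masks are merged, a prompt such as "dog" on a photo with two dogs keeps both animals as long as each detection clears the threshold.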

Output Parameters

- IMAGE (IMAGE, not a list): The segmented image.

- MASK (MASK, not a list): A mask that highlights the segmented area. It can be used for further image processing or analysis.

Category

- segment_anything

For more custom nodes on ComfyFlow, see ComfyFlow Custom Nodes.

For more models on ComfyFlow, see Common Models.